A while ago I made an example of how to use TensorFlow models in Unity using the TensorFlow Sharp plugin: TFClassify-Unity. Image classification worked well enough, but object detection had poor performance. Still, I figured it could be a good starting point for someone who needs this kind of functionality in a Unity app.

Unfortunately, Unity stopped supporting TensorFlow and moved to their own inference engine, code-named Barracuda. You can still use the example above, but the latest plugin was built with TensorFlow 1.7.1 in mind and anything trained with higher versions might not work at all. The good news is that Barracuda should support ONNX models out of the box and you can convert your TensorFlow model to the supported format easily enough. The bad news is that Barracuda is still in preview and there are some caveats.

Differences

With the TensorFlow Sharp plugin, my idea was to take the TensorFlow example for Android and make a similar one for Unity using the same models: inception_v1 for image classification and ssd_mobilenet_v1 for object detection. I had successfully tried the mobilenet_v1 architecture as well - it's not in the example, but all you need is to replace input/output names and std/mean values.

With Barracuda, things are a bit more complicated. There are 3 ways to try a given architecture in Unity: use an ONNX model that you already have, convert a TensorFlow model using the TensorFlow to ONNX converter, or convert it to Barracuda format using the TensorFlow to Barracuda script provided by Unity (you'll need to clone the whole repo to use this converter, or install it with pip install mlagents).

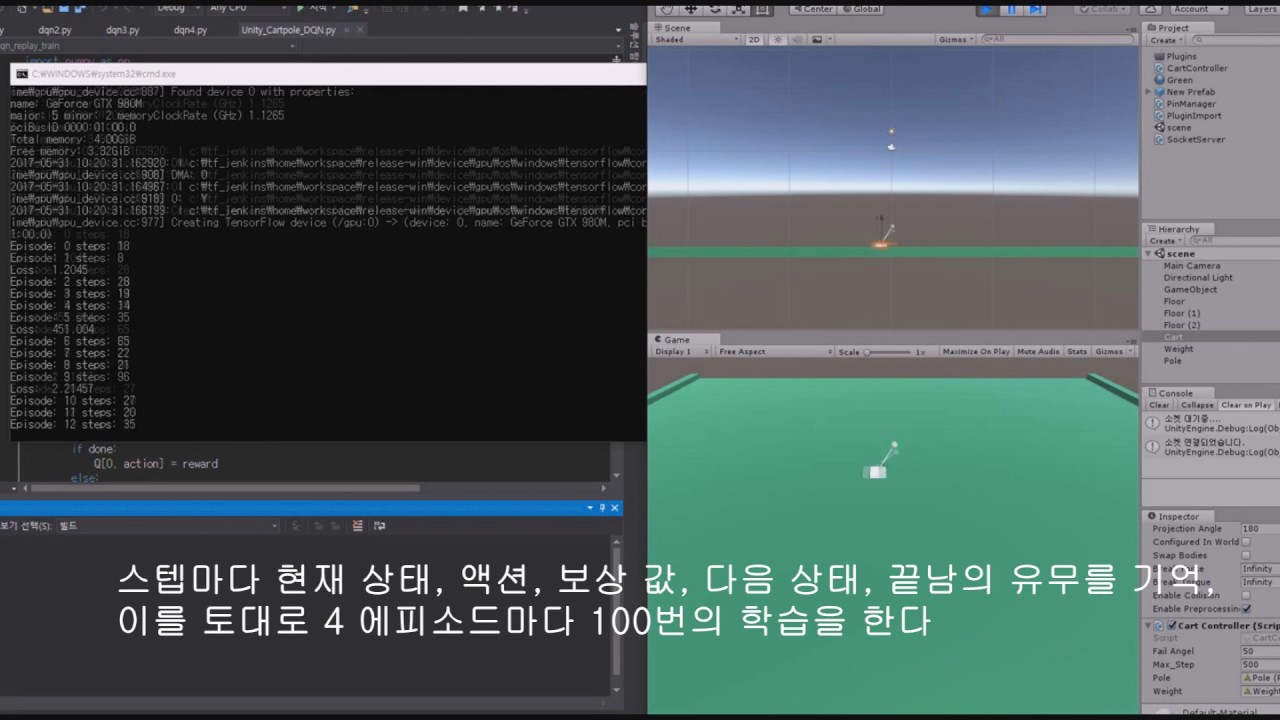

None of those things worked for me with the inception and ssd-mobilenet models. There were either problems with converting, or Unity wouldn't load the model, complaining about unsupported tensors, or it would crash or return nonsensical results during inference. The pre-trained inception ONNX model seemed like it really wanted to get there, but kept crapping out on the way with either weird errors or weird results (perhaps someone else will have more luck with that one).

But some things did work.

Image classification

MobileNet is a great architecture for mobile inference since, as its name suggests, it was created exactly for that. It's small, fast and there are different versions that provide a trade-off between size/latency and accuracy. I didn't try the latest mobilenet_v3, but v1 and v2 work great both as ONNX and after tf-barracuda conversion. If you have an .onnx model, you're set, but if you have a .pb (TensorFlow model in protobuf format), the conversion is easy enough using the tensorflow-to-barracuda converter:

The converter figures out inputs/outputs itself. Those are good to keep around in case you need to modify them in code later. Here I have input name 'input' and output name 'MobilenetV2/Predictions/Reshape_1'. You can also see those in the Unity editor when you select the model for inspection. One thing to note: with mobilenet_v2, the converter and the Unity inspector show the wrong input dimensions (they should be [1, 224, 224, 3]), but this doesn't seem to matter in practice.

Then you can load and run this model using Barracuda as described in the documentation:

The important thing that has to be done with the input image to make inference work is normalization, which means shifting and scaling down pixel values so that they go from [0;255] range to [-1;1]:
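As a rough sketch of that arithmetic (in plain Python rather than Unity C#, just to show the math): it assumes a mean and scale of 127.5, which maps 0 to -1 and 255 to 1. The function name and the flat RGB layout are made up for illustration, so use whatever values your model was actually trained with.

```python
def normalize_pixels(rgb_pixels, mean=127.5, std=127.5):
    """Flatten (r, g, b) byte triples into floats in the [-1, 1] range.

    mean and std are assumed values, not something read from the model.
    """
    flat = []
    for r, g, b in rgb_pixels:
        # Shift by the mean, then scale down by the standard deviation.
        flat.extend(((r - mean) / std, (g - mean) / std, (b - mean) / std))
    return flat


print(normalize_pixels([(0, 255, 51)]))
```

The resulting flat list has the same channel-last ordering as the [1, 224, 224, 3] input mentioned earlier, so it could be copied into the input tensor as is.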

Barracuda actually has a method to create a tensor from Texture2D, but it doesn't accept parameters for scaling and bias. That's odd, since it is often a necessary step before running inference on an image. Be careful when trying your own models, though - some of them might have scaling and bias layers as part of the model itself, so be sure to inspect the model in Unity before using it.

Object detection

There seem to be 2 object detection architectures that are currently used most often: SSD-MobileNet and YOLO. Unfortunately, SSD is not yet supported by Barracuda (as stated in this issue). I had to settle on YOLO v2, but YOLO was originally implemented in DarkNet, so to get either a TensorFlow or an ONNX model you'll need to convert the DarkNet weights to the necessary format first.

Fortunately, already converted ONNX models exist. However, the full network seemed way too large for mobile inference, so I chose the Tiny-YOLO v2 model available here (opset version 7 or 8). If you already have a TensorFlow model, the tensorflow-to-barracuda converter works just as well; in fact, I have one in my repository that you can try.

Funny enough, the ONNX model already has layers for normalizing image pixels, except that they don't appear to actually do anything, because this model doesn't require normalization and works with pixels in the [0;255] range just fine.

The biggest pain with YOLO is that its output requires much more interpretation than that of SSD-MobileNet. Here is the description of Tiny-YOLO output from the ONNX repository:

'The output is a (125x13x13) tensor where 13x13 is the number of grid cells that the image gets divided into. Each grid cell corresponds to 125 channels, made up of the 5 bounding boxes predicted by the grid cell and the 25 data elements that describe each bounding box (5x25=125).'

Yeah. Fortunately, Microsoft has a good tutorial on using ONNX models for object detection with .NET that we can steal a lot of code from, although with some modifications.
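To make that layout concrete, here is a minimal sketch of decoding a single grid cell, in plain Python rather than the C# used in the tutorial. It assumes the usual YOLO v2 conventions (sigmoid on the x/y offsets and confidence, exponent on width/height, softmax over the 20 class scores), a 416x416 input and the anchor values commonly published for Tiny-YOLO v2. decode_cell and the threshold are illustrative, not part of any library.

```python
import math

BOXES_PER_CELL = 5
CLASSES = 20                   # 25 values per box = 4 coords + 1 confidence + 20 classes
VALUES_PER_BOX = 5 + CLASSES
CELL_SIZE = 32                 # assumption: 416x416 input, 416 / 13 = 32 px per cell
# Assumption: (width, height) anchors commonly published for Tiny-YOLO v2.
ANCHORS = [(1.08, 1.19), (3.42, 4.41), (6.63, 11.38), (9.42, 5.11), (16.62, 10.52)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax(values):
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def decode_cell(output, row, col, threshold=0.3):
    """output[channel][row][col] -> list of (x, y, w, h, class_index, score)."""
    boxes = []
    for b in range(BOXES_PER_CELL):
        base = b * VALUES_PER_BOX  # first of this box's 25 channels
        tx, ty, tw, th, tc = (output[base + i][row][col] for i in range(5))
        probs = softmax([output[base + 5 + c][row][col] for c in range(CLASSES)])
        best = max(range(CLASSES), key=probs.__getitem__)
        score = sigmoid(tc) * probs[best]
        if score < threshold:
            continue
        center_x = (col + sigmoid(tx)) * CELL_SIZE
        center_y = (row + sigmoid(ty)) * CELL_SIZE
        w = math.exp(tw) * ANCHORS[b][0] * CELL_SIZE
        h = math.exp(th) * ANCHORS[b][1] * CELL_SIZE
        # Store the top-left corner instead of the center.
        boxes.append((center_x - w / 2, center_y - h / 2, w, h, best, score))
    return boxes
```

Running this over all 13x13 cells and then filtering overlapping boxes (non-maximum suppression) would give the final detections. Note that a Barracuda tensor may be laid out channels-last, in which case the indexing order would need to change.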

Let's not block things

My TensorFlow Sharp example was pretty dumb in terms of parallelism since I simply ran inference once a second in the main thread, blocking the camera from playing. There were other examples showing a more reasonable approach, like running the model in a separate thread (thanks MatthewHallberg).

However, running Barracuda in a separate thread simply didn't work, producing some ugly-looking crashes. Judging by the documentation, Barracuda should be asynchronous by default, scheduling inference on the GPU automatically (if available), so you simply call Execute() and then query the result sometime later. In reality, there are still caveats.

So the Barracuda worker class has 3 methods that you can run inference with: Execute(), ExecuteAsync() and an extension method ExecuteAndWaitForCompletion(). The last one is obvious: it blocks. Don't use it unless you want your app to freeze during the process. The Execute() method works asynchronously, so you should be able to do something like this:

...or query the output in a different method entirely. However, I've noticed that there is still a slight delay even if you just call Execute() and nothing else, causing the camera feed to jitter slightly. This might be less noticeable on newer devices, so try before you buy.

ExecuteAsync() seems like a very nice option to run inference asynchronously: it returns an enumerator which you can run with StartCoroutine(worker.ExecuteAsync(inputs)). However, internally this method does yield return null after each layer, which means executing one layer per frame. Depending on the number of layers in your model and the complexity of the operations in them, that might yield too often and make the model execute much slower than it could (as I found out is the case with the mobilenet model for image classification). The YOLO model does seem to work better with ExecuteAsync() than with the other methods, although the number of devices I can test it on is quite limited.

Playing around with different methods to run a model, I found another possibility: since ExecuteAsync() is an IEnumerator, you can iterate it manually, executing as many layers per frame as you want:
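The idea can be sketched independently of Unity. Below is a minimal Python illustration where a generator stands in for the IEnumerator returned by ExecuteAsync() and next() plays the role of MoveNext(). The layer names, fake_execute_async and run_layers are made up for the example and are not Barracuda API.

```python
def fake_execute_async(layers, executed):
    """Stand-in for worker.ExecuteAsync(): run one layer, then yield."""
    for layer in layers:
        executed.append(layer)  # pretend the layer was computed here
        yield


def run_layers(enumerator, layers_per_frame):
    """Advance the enumerator a few steps; return True once it is exhausted."""
    for _ in range(layers_per_frame):
        try:
            next(enumerator)  # the C# counterpart would be MoveNext()
        except StopIteration:
            return True
    return False


executed = []
enumerator = fake_execute_async(["layer%d" % i for i in range(10)], executed)
frames = 1
while not run_layers(enumerator, layers_per_frame=4):  # one call per frame
    frames += 1
print(frames, len(executed))
```

With 10 layers and 4 layers per frame, the whole model finishes in 3 frames instead of 10. The right number of layers per frame depends on the model and the device, so it is something to tune by hand.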

That's a bit hacky and totally not platform-independent, so judge for yourself, but I found it actually works better for the mobilenet image classification model, causing minimal lag.

Conclusion

Once again, Barracuda is still in early development, and a lot of things change often and radically. For instance, my example was tested with the 0.4.0-preview version of the plugin, and 0.5.0-preview already breaks it, making object detection produce wrong results (so make sure to install 0.4.0 if you're going to try the example; I'll be looking into newer versions later). But I think that an inference engine that works cross-platform without all the hassle of TensorFlow versions, supports ONNX out of the box and is baked into Unity is a great development.

So does the Barracuda example give better performance than the TensorFlow Sharp one? It's hard to compare, especially with object detection. Different architectures are used, Tiny-YOLO has a lot fewer labels than SSD-MobileNet, the TFSharp example is faster with OpenGLES while Barracuda works better with Vulkan, the async strategies are different and it's not yet clear how to get the best async results from Barracuda. But comparing image classification with MobileNet v1 on my Galaxy S8, I got the following inference times running it synchronously: ~400ms for TFSharp with OpenGLES 2/3 vs ~110ms for Barracuda with Vulkan. What's more important is that Barracuda will likely be developed, supported and improved for years to come, so I fully expect even bigger performance gains in the future.

I hope this article and example will be useful for you and thanks for reading!

Check out complete code on my github: https://github.com/Syn-McJ/TFClassify-Unity-Barracuda

Reinforcement learning is exciting. It also is quite difficult. Knowing both of these things to be true, I wanted to find a way to use Unity’s ML-Agents reinforcement learning framework to train neural networks for use on the web with TensorFlow.js (TFJS).

Why do this? Specifically, why use Unity ML Agents rather than training the models in TFJS directly? After all, TFJS currently has at least two separate examples of reinforcement learning, each capable of training in the browser with TFJS directly (or somewhat more practically, training with TensorFlow.js’s Node.js backend). While there is something very exciting about the idea of training and using a reinforcement learning agent all in the browser, I had a lot of difficulty training a functional model using either of these examples. And my understanding of the intricacies of the RL algorithms in use (and how to optimize their respective hyper-parameters, etc.) is limited, so debugging these examples involved a deep dive into some pretty scary looking code.

So I turned to Unity’s ML Agents framework, which uses the Unity 3D development environment as host to various agent-based RL models. What is most exciting for me about this framework is that:

- it appears to have a lot of support and an exciting (open source) community developing around it,

- not having to build your own environment makes getting up and running with the agent-training pretty quick,

- it features at least two algorithms for RL training (Proximal Policy Optimization and Soft Actor Critic), many working examples, and a backend which allows for a lot of more advanced training techniques (e.g. curriculum learning).

Because it uses TensorFlow to train models, I thought it might be possible to export a model for TFJS pretty quickly. This was not the case, but hopefully my stumbling through it will help you. What follows is how I managed to convert a neural network model trained using reinforcement learning in Unity into a TensorFlow.js model for use in the browser.

Note that the information below may well be out of date by the time you are reading it. The Unity ML Agents repo seems to be changing pretty quickly, as does the TensorFlow.js repo. Think of this as a lesson in stubbornness rather than a strict guide. If you want to skip my process and just read how to do this yourself, skip to “Attempt #2”.

What is a “.nn” file?

When I downloaded the latest release of the ML Agents package, I noticed that all of the example models were stored as mysterious “.nn” files. It turns out that the Unity ML Agents framework now exports trained models as “.nn” files for use with Unity’s new Barracuda inference engine. All of the pre-trained example models were stored in this “.nn” format (which cannot be converted to TensorFlow.js format models). At this point, I thought this project might have reached a dead end :(, but I opened an issue on the Unity ML Agents github repo anyway asking for them to implement a Barracuda to TensorFlow Converter.

After another few hours reading through the Unity ML Agents codebase and messing about with the example scenes, however, I realized that Unity still uses TensorFlow for training. Once training is complete, it converts and exports the TensorFlow model to Barracuda ‘.nn’ format using a conversion script. Crucially, this function also exports a TensorFlow Frozen Graph (“.pb”), checkpoint and various other files. These are gitignored from Unity’s ML Agents repo, so in order to access them, it is necessary to train a model from scratch. After training, these files will be listed in your training output folder along with the “.nn” file:

Attempt #1: Convert Frozen Model to TFJS Model

My next attempt to get a working TFJS model from Unity was to directly convert the ‘.pb’ Frozen Graph format to TensorFlow.js format using the TensorFlow.js Converter. Unfortunately, the converter utility had deprecated the ‘Frozen Model’ format to focus its support on the harder, better, faster, stronger SavedModel format. What is the difference between a FrozenModel and a SavedModel? I don’t really know. So after another few hours of fooling about with Python virtual environments, I managed to install an earlier release of TensorFlow.js (0.8.6) which supported converting a Frozen TensorFlow Model to a TensorFlow.js Model. Hurray!

Now I had to figure out what to input into the output_node_names parameter for the tensorflowjs_converter shell command. What is in an (output node) name? At this point, I had no idea, but I had found the file in the Unity ML Agents code which contains the export_model function responsible for exporting the TensorFlow Frozen Model and Unity Barracuda model. This function contained a line which defined target_nodes. This sounds pretty close to output_nodes, doesn’t it? Printing out these target nodes seemed like a fruitful next step.

a brief aside:

I have to pause here to mention that in order to alter the ML-Agents Python code (the part of the Unity ML-Agents platform which runs training with TensorFlow), it was necessary to install these Python packages from the repo rather than from PyPI (i.e. with ‘pip’), which involved a whole bunch more struggling with Python virtual environments and following this guide here.

I added a print(target_nodes) line to the export_model function, ran the mlagents-learn script again and found that the target_nodes were is_continuous_control,version_number,memory_size,action_output_shape,action. Next I plugged these into the TFJS converter script with the following command:

At this point, I was greeted with a new and exciting error! This felt like success:

Some searching turned up a quick and dirty solution: add a --skip_op_check=SKIP_OP_CHECK flag to the converter script parameters.
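For reference, here is a small Python sketch that assembles such a converter invocation from the target node names. It assumes the --input_format=tf_frozen_model and --output_node_names options of the older tensorflowjs_converter releases. The file and folder names are placeholders, not the ones I actually used.

```python
import shlex

# Target nodes printed from the export_model function.
TARGET_NODES = [
    "is_continuous_control",
    "version_number",
    "memory_size",
    "action_output_shape",
    "action",
]


def frozen_model_command(pb_path, out_dir, output_nodes, skip_op_check=True):
    """Build the argument list for converting a frozen graph to a TFJS model."""
    cmd = [
        "tensorflowjs_converter",
        "--input_format=tf_frozen_model",  # assumed option of the 0.8.x converter
        "--output_node_names=" + ",".join(output_nodes),
    ]
    if skip_op_check:
        cmd.append("--skip_op_check=SKIP_OP_CHECK")
    return cmd + [pb_path, out_dir]


# Placeholder paths, hypothetical names for illustration only.
command = frozen_model_command("frozen_graph_def.pb", "web-model", TARGET_NODES)
print(" ".join(shlex.quote(part) for part in command))
```

The printed line can be pasted into a shell, or the list can be passed to subprocess.run() directly.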

It worked! There was a new web-model folder with three files:

- weights_manifest.json, a human-readable JSON file,

- tensorflowjs_model.pb, a non-human-readable (binary data) file, and

- group1-shard1of1, a non-human-readable (binary data) file.

Hurray!

But wait… How do I load this into TFJS?

Loading Frozen TFJS Model in TFJS 0.15

Because I converted a Frozen Model into a TFJS model, loading it required the (deprecated) tf.loadFrozenModel function, which required downgrading to TFJS v < 1.0.0. This done, the following code loaded the model:

At this point, I saw the JavaScript version of an error from before: Uncaught (in promise) Error: Tensorflow Op is not supported: AddV2. Rather than continue down the rabbit hole of working with older versions of TFJS, I took another tack: exporting a TensorFlow SavedModel from Unity, which could be converted to a TFJS model using the latest version of the TFJS converter.

Attempt #2: Exporting a SavedModel from Unity

At this point, I was trying to export a SavedModel from Unity for use with the tensorflowjs_converter. This ended up being the approach which worked. Roughly, these were the required steps to export a SavedModel from Unity:

- Install Unity ML Agents according to development installation instructions (to allow changes to python code)

- In the ml-agents/mlagents/trainers/tf_policy.py script, add the following lines to the export_model function to export a TensorFlow SavedModel. Note that the required graph nodes are different for continuous action space (i.e. the agent takes action on float values) and discrete action space (i.e. the agent takes action on integer values), so you will need to uncomment the respective lines accordingly:

The exported SavedModel should be able to be converted to a web model by the latest tensorflowjs-converter (tfjs version 1.4.0, currently) using the following script (run from within the SavedModel folder):
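A sketch of what that invocation might look like, wrapped in Python so it can be launched with subprocess. It assumes the --input_format=tf_saved_model and --output_format=tfjs_graph_model options of the 1.x converter. The web_model output folder is a hypothetical name.

```python
def saved_model_command(saved_model_dir=".", out_dir="web_model"):
    """Build the argument list for converting a SavedModel to a TFJS graph model."""
    return [
        "tensorflowjs_converter",
        "--input_format=tf_saved_model",      # assumed option of the 1.x converter
        "--output_format=tfjs_graph_model",   # produces model.json plus .bin shards
        saved_model_dir,                      # "." when run from the SavedModel folder
        out_dir,                              # hypothetical output folder name
    ]


print(" ".join(saved_model_command()))
```

To actually run the conversion, pass the list to subprocess.run(saved_model_command(), check=True) from inside the SavedModel folder.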

You should now have a model.json file and one or more binary files (i.e. group1-shard1of1.bin)

another brief aside:

Unity ML Agents uses Tensorboard to monitor training progress. Tensorboard has a ‘graphs’ tab which shows a visual representation of all of the inputs and outputs to each node of a graph model. This was somewhat helpful for starting to understand which nodes were necessary to attach as inputs and outputs for a SavedModel. It looks like this:

This graph is not exported by default, but this functionality can be added to the Unity codebase. An object called tf.summary.FileWriter is responsible for outputting the summaries. This object is located inside ml-agents/mlagents/trainers/trainer.py. Inside this file, a function called write_tensorboard_text calls this object and outputs the training summaries to file, to be read by Tensorboard. To include the graph in this training summary, alter the write_tensorboard_text function to include the following:

The graph diagram should now be visible in Tensorboard.

Hosting / Running Inference on a Unity Model in TFJS:

There are many TFJS resources available online. I found it particularly helpful to look at ml5.js’s source code to understand how to load and run inference on a model in TFJS. That said, I learned a few things along the way about running inference on a Unity model specifically, which are included in the code below:

At this point, you should be able to generate inputs and get outputs from your Unity RL model in TFJS. :)! Here is an example of the 3D Balance Ball example scene in TFJS.
